Red Hat OpenShift is an enterprise-grade Kubernetes platform for managing Kubernetes clusters at scale, developed and supported by Red Hat. It offers a path to transform how organizations manage complex infrastructures on-premises as well as across the hybrid cloud.

AI computing brings far-reaching transformations to modern business, including fraud detection in financial services and recommendation engines for entertainment and e-commerce. A 2018 CTA Market Research study shows that companies using AI technology at the core of their corporate strategy boosted their profit margin by 15% compared to companies not embracing AI.

The responsibility of providing infrastructure for these new, massively compute-intensive workloads falls on the shoulders of IT. Many organizations struggle with the complexity, time, and cost associated with deploying an IT-approved infrastructure for AI; up to 40% of organizations wishing to deploy AI see infrastructure as a major blocker. Deploying a cluster for AI workloads often raises questions about network topology and the sizing of compute and storage resources. NVIDIA has therefore created reference architectures for typical applications to take the guesswork out of these decisions.

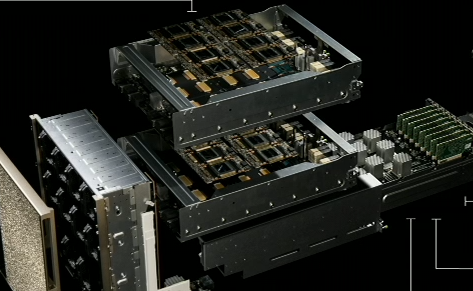

The NVIDIA DGX POD, for example, consists of multiple DGX-1 systems and storage from named vendors. NVIDIA developed DGX POD from its experience deploying thousands of nodes of leading-edge accelerated computing in the world's largest research and enterprise environments. Ensuring AI success at scale, however, also requires a software platform, such as Kubernetes™, that keeps the AI infrastructure manageable.

Red Hat OpenShift 4 is a major release incorporating technology from Red Hat's acquisition of CoreOS. At its core (no pun intended) are immutable system images based on Red Hat Enterprise Linux CoreOS (RHCOS). It follows a new paradigm in which installations are never modified or updated after they are deployed; instead, the entire system image is replaced with an updated version. This provides higher reliability and consistency, along with a more predictable deployment process.

This post takes a first look at OpenShift 4 and GPU Operators for AI workloads on NVIDIA reference architectures. It is based on a software preview that still required some manual steps; these are expected to be resolved in the final version.

Installing and running OpenShift requires a Red Hat account and additional subscriptions. The official installation instructions are available in the OpenShift documentation under Installing a cluster on bare metal.

Test Setup Overview

The minimum configuration for an OpenShift cluster consists of three master nodes and two worker nodes (also called compute nodes). Initial setup of the cluster requires an additional bootstrap node, which can be removed or repurposed during the installation process. Requiring three master nodes ensures high availability (avoiding split-brain situations) and allows the master nodes to be upgraded without interrupting the cluster.
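The three-master requirement follows from how etcd, which runs on the master nodes, reaches consensus: a majority (quorum) of members must agree on every write. The following Python sketch only illustrates that standard majority arithmetic; it is not part of the OpenShift tooling.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of an etcd cluster with the given number of members."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while the remaining ones still form a majority."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"{n} masters: quorum={quorum_size(n)}, "
              f"tolerates {failures_tolerated(n)} failure(s)")
```

Two masters tolerate no failure at all, while three tolerate one, which is also what allows the masters to be upgraded one at a time without losing quorum. A fourth master adds no extra fault tolerance.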

We used virtual machines on a single x86 machine for the bootstrap and master nodes, and two DGX-1 systems as bare-metal compute nodes. A load balancer ran in a separate VM to distribute requests to the nodes. Round-robin DNS might also have worked, but getting its configuration right turned out to be tricky. The virsh network must be set to bridged mode instead of NAT so that the nodes can communicate with each other (see Libvirt Networking for more details).

Red Hat OpenShift 4 does not yet provide a fully automated installation method for bare-metal systems; instead, it requires external infrastructure for provisioning and performing the initial installation (the OpenShift documentation calls this User Provisioned Infrastructure, or UPI). In our case, we used the x86 server for provisioning and for booting the nodes through PXE. Once installed, the nodes perform upgrades automatically.

Creating the System Configurations

Red Hat Enterprise Linux CoreOS uses Ignition for system configuration. Ignition provides functionality similar to cloud-init and configures systems during their first boot.

The OpenShift installer generates the Ignition files from the configuration file install-config.yaml. This file describes the cluster through various parameters and also includes an SSH key and the credentials (pull secret) for pulling containers from the Red Hat container registry. The OpenShift tools and the pull secret can be downloaded from the OpenShift Start Page.

```yaml
apiVersion: v1
baseDomain: nvidia.com
compute:
- hyperthreading: Enabled
  name: worker
  platform: {}
  replicas: 2
controlPlane:
  hyperthreading: Enabled
  name: master
  platform: {}
  replicas: 3
metadata:
  creationTimestamp: null
  name: dgxpod
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  machineCIDR: 10.0.0.0/16
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '{"auths": ….}'
sshKey: ssh-rsa ...
```

The parameters baseDomain and metadata.name together form the domain name of the cluster (dgxpod.nvidia.com). The networking parameters describe the internal networks of the OpenShift cluster and only need to be modified if they conflict with the external network.
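To make the conflict check concrete, the following Python sketch uses the standard ipaddress module to test the clusterNetwork and serviceNetwork ranges from install-config.yaml against an external network, and to count the per-node subnets that hostPrefix carves out of clusterNetwork. The external network 10.33.3.0/24 is an assumption inferred from the node addresses used later in this post; substitute your own.

```python
import ipaddress
from itertools import combinations

# clusterNetwork and serviceNetwork come from install-config.yaml above;
# "external" is an ASSUMED /24 covering the 10.33.3.x node addresses.
NETWORKS = {
    "clusterNetwork": ipaddress.ip_network("10.128.0.0/14"),
    "serviceNetwork": ipaddress.ip_network("172.30.0.0/16"),
    "external": ipaddress.ip_network("10.33.3.0/24"),
}
HOST_PREFIX = 23  # networking.clusterNetwork[0].hostPrefix


def overlapping_pairs(networks):
    """Return the names of every pair of networks whose address ranges overlap."""
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


def pod_subnet_layout(cluster_network, host_prefix):
    """Return (number of per-node subnets, addresses in each subnet)."""
    node_subnets = 2 ** (host_prefix - cluster_network.prefixlen)
    first = next(cluster_network.subnets(new_prefix=host_prefix))
    return node_subnets, first.num_addresses


if __name__ == "__main__":
    print("overlaps:", overlapping_pairs(NETWORKS) or "none")
    count, size = pod_subnet_layout(NETWORKS["clusterNetwork"], HOST_PREFIX)
    print(f"{count} node subnets of {size} addresses each")
```

With a /14 cluster network and a hostPrefix of 23, there is room for 2^(23-14) = 512 node subnets of 512 addresses each, far more than a DGX POD needs.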

The following commands create the Ignition files for the nodes and the authentication file for the cluster. Because the installer deletes install-config.yaml while generating these files, we kept a copy of it outside the ignition directory. The generated authentication file (ignition/auth/kubeconfig) should be copied to $HOME/.kube/config so that oc can reach the cluster.

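```shell
mkdir ignition
cp install-config.yaml ignition
openshift-install --dir ignition create ignition-configs
# Make the generated cluster credentials the default kubeconfig for oc
mkdir -p ~/.kube
cp ignition/auth/kubeconfig ~/.kube/config
```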
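The master.ign and worker.ign files produced by this step are small pointer configs: rather than carrying the full system configuration, they tell Ignition where to fetch it from the machine config server, which is why port 22623 appears in the load balancer configuration. The Python sketch below extracts those URLs as a quick sanity check. It assumes the Ignition spec 2.x layout (ignition.config.append) used by installers of this generation, and also accepts the spec 3.x key merge; the file names passed on the command line are up to you.

```python
import json
import sys
from pathlib import Path


def pointer_sources(ignition_config: dict) -> list:
    """Return the remote config URLs an Ignition pointer config refers to.

    ASSUMPTION: the URLs live under ignition.config.append (spec 2.x)
    or ignition.config.merge (spec 3.x); anything else yields [].
    """
    config = ignition_config.get("ignition", {}).get("config", {})
    entries = config.get("append", []) + config.get("merge", [])
    return [entry["source"] for entry in entries if "source" in entry]


def load(path: str) -> dict:
    """Read one generated .ign file (plain JSON)."""
    return json.loads(Path(path).read_text())


if __name__ == "__main__":
    # Example: python3 ign_sources.py ignition/master.ign ignition/worker.ign
    for name in sys.argv[1:]:
        print(name, pointer_sources(load(name)))
```

If the printed URL does not use api-int with the cluster domain and port 22623, the DNS and load balancer entries described below will not match what the nodes try to reach.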

DHCP and PXE Boot

Setting up PXE boot is not an easy feat, and detailed instructions are beyond the scope of this post; readers should already be familiar with setting up PXE booting and DHCP. The following snippets cover only the DNS configuration for dnsmasq.

The address directive in the dnsmasq configuration file resolves apps.dgxpod.nvidia.com and every name below it (the *.apps wildcard) to the address of the load balancer. The SRV entries let the cluster discover the etcd servers.

```
# Add hosts file
addn-hosts=/etc/hosts.dnsmasq

# Resolve apps.dgxpod.nvidia.com and all names below it (*.apps) to the load balancer
address=/apps.dgxpod.nvidia.com/10.33.3.54/

# SRV DNS records for etcd (name, target, port, priority, weight)
srv-host=_etcd-server-ssl._tcp.dgxpod.nvidia.com,etcd-0.dgxpod.nvidia.com,2380,0,10
srv-host=_etcd-server-ssl._tcp.dgxpod.nvidia.com,etcd-1.dgxpod.nvidia.com,2380,0,10
srv-host=_etcd-server-ssl._tcp.dgxpod.nvidia.com,etcd-2.dgxpod.nvidia.com,2380,0,10
```
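Because the SRV records differ only in the etcd index, they are easy to generate, which avoids copy-and-paste mistakes when the cluster or domain name changes. The following Python sketch renders the dnsmasq lines shown above from the cluster name, base domain, load balancer address, and number of masters; the function name and its parameters are illustrative, not part of any OpenShift tool.

```python
def dnsmasq_lines(cluster: str, base_domain: str, lb_ip: str, masters: int = 3) -> list:
    """Render dnsmasq directives for an OpenShift cluster domain.

    srv-host fields are: name, target, port, priority, weight
    (2380, 0 and 10 match the configuration shown above).
    """
    domain = f"{cluster}.{base_domain}"
    lines = [
        "addn-hosts=/etc/hosts.dnsmasq",
        # Answer every name under apps.<domain> with the load balancer address.
        f"address=/apps.{domain}/{lb_ip}/",
    ]
    for i in range(masters):
        lines.append(
            f"srv-host=_etcd-server-ssl._tcp.{domain},etcd-{i}.{domain},2380,0,10"
        )
    return lines


if __name__ == "__main__":
    print("\n".join(dnsmasq_lines("dgxpod", "nvidia.com", "10.33.3.54")))
```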

The corresponding /etc/hosts.dnsmasq file lists the IP addresses and host names. Note that OpenShift requires the first name listed for each host to be the node name, such as master-0. The api-int and api entries point to the load balancer.

```
10.33.3.44 worker-0.dgxpod.nvidia.com
10.33.3.46 worker-1.dgxpod.nvidia.com
10.33.3.50 master-0.dgxpod.nvidia.com etcd-0.dgxpod.nvidia.com
10.33.3.51 master-1.dgxpod.nvidia.com etcd-1.dgxpod.nvidia.com
10.33.3.52 master-2.dgxpod.nvidia.com etcd-2.dgxpod.nvidia.com
10.33.3.53 bootstrap.dgxpod.nvidia.com
10.33.3.54 api-int.dgxpod.nvidia.com api.dgxpod.nvidia.com
```
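A wrong first name on a line is easy to overlook and hard to diagnose later, so a quick check can save time. The following Python sketch parses hosts-file text and verifies the two rules stated above: the first name on every node line is the node name, and api and api-int point to the load balancer. It assumes node names start with master-, worker-, or bootstrap, as in this post; the prefixes and function names are illustrative.

```python
NODE_PREFIXES = ("master-", "worker-", "bootstrap")  # ASSUMED naming scheme


def parse_hosts(text: str) -> list:
    """Parse hosts-file text into (ip, [names]) tuples, ignoring comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if line:
            ip, *names = line.split()
            entries.append((ip, names))
    return entries


def check_hosts(text: str, domain: str, lb_ip: str) -> list:
    """Return human-readable problems; an empty list means both rules hold."""
    problems = []
    resolved = {}
    for ip, names in parse_hosts(text):
        for name in names:
            resolved[name] = ip
        # A line that mentions a node must list the node name first.
        if any(n.startswith(NODE_PREFIXES) for n in names) and not names[0].startswith(NODE_PREFIXES):
            problems.append(f"{ip}: first name {names[0]} is not the node name")
    for name in (f"api.{domain}", f"api-int.{domain}"):
        if resolved.get(name) != lb_ip:
            problems.append(f"{name} does not point to the load balancer {lb_ip}")
    return problems


if __name__ == "__main__":
    with open("/etc/hosts.dnsmasq") as f:
        for problem in check_hosts(f.read(), "dgxpod.nvidia.com", "10.33.3.54"):
            print(problem)
```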

The following pxelinux.cfg file is an example of a non-EFI (BIOS) PXE boot configuration. It defines the kernel and initial ramdisk and provides additional kernel command-line arguments. Note that the arguments prefixed with coreos are passed to the CoreOS installer.

```
DEFAULT rhcos
PROMPT 0
TIMEOUT 0
LABEL rhcos
    kernel rhcos/rhcos-410.8.20190425.1-installer-kernel
    initrd rhcos/rhcos-410.8.20190425.1-installer-initramfs.img
    append ip=dhcp rd.neednet=1 console=tty0 console=ttyS0 coreos.inst=yes coreos.inst.install_dev=vda coreos.inst.image_url=http://10.33.3.18/rhcos/rhcos-410.8.20190412.1-metal-bios.raw coreos.inst.ignition_url=http://10.33.3.18/rhcos/ignition/master.ign
```
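This configuration always installs master.ign. The bootstrap VM needs the same file with bootstrap.ign instead, so one option is to give each machine its own file: among the files PXELINUX tries before default is one named after the client's MAC address, written as 01- followed by the lowercase MAC with dashes. The Python sketch below renders such per-role files from the values above. It assumes bootstrap.ign, master.ign, and worker.ign all sit in the same ignition directory on the HTTP server; the MAC address in the usage example is made up.

```python
HTTP = "http://10.33.3.18/rhcos"  # HTTP server used in this post
KERNEL = "rhcos/rhcos-410.8.20190425.1-installer-kernel"
INITRD = "rhcos/rhcos-410.8.20190425.1-installer-initramfs.img"
IMAGE = f"{HTTP}/rhcos-410.8.20190412.1-metal-bios.raw"


def pxelinux_filename(mac: str) -> str:
    """Per-host PXELINUX file name: '01-' (Ethernet) + lowercase MAC with dashes."""
    return "01-" + mac.lower().replace(":", "-")


def pxelinux_config(role: str, install_dev: str = "vda") -> str:
    """Render a BIOS PXELINUX config that installs RHCOS with <role>.ign."""
    if role not in ("bootstrap", "master", "worker"):
        raise ValueError(f"unknown role: {role}")
    append = " ".join([
        "ip=dhcp", "rd.neednet=1", "console=tty0", "console=ttyS0",
        "coreos.inst=yes",
        f"coreos.inst.install_dev={install_dev}",
        f"coreos.inst.image_url={IMAGE}",
        f"coreos.inst.ignition_url={HTTP}/ignition/{role}.ign",
    ])
    return "\n".join([
        "DEFAULT rhcos", "PROMPT 0", "TIMEOUT 0", "LABEL rhcos",
        f"    kernel {KERNEL}", f"    initrd {INITRD}", f"    append {append}", "",
    ])


if __name__ == "__main__":
    # Hypothetical MAC address of the bootstrap VM.
    print(pxelinux_filename("52:54:00:AA:BB:01"))
    print(pxelinux_config("bootstrap"))
```

The DGX-1 systems boot through UEFI, so this BIOS-style file does not apply to them.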

Kernel, initramfs, and raw images are available from the OpenShift Mirror; the installation instructions (Installing a cluster on bare metal) list the latest version and download path. Copy the image files and the Ignition configurations from the previous step to the HTTP server's directory, and make sure all of these files carry an SELinux label the HTTP server is allowed to read (for example, httpd_sys_content_t). Note that the DGX-1 systems support network booting only through UEFI and thus require a different set of files.

Load Balancer

The load balancer distributes requests across the online nodes. We ran an instance of CentOS in a separate virtual machine and used HAProxy with the following configuration.

```
listen ingress-http
    bind *:80
    mode tcp
    server worker-0 worker-0.dgxpod.nvidia.com:80 check
    server worker-1 worker-1.dgxpod.nvidia.com:80 check

listen ingress-https
    bind *:443
    mode tcp
    server worker-0 worker-0.dgxpod.nvidia.com:443 check
    server worker-1 worker-1.dgxpod.nvidia.com:443 check

listen api
    bind *:6443
    mode tcp
    server bootstrap bootstrap.dgxpod.nvidia.com:6443 check
    server master-0 master-0.dgxpod.nvidia.com:6443 check
    server master-1 master-1.dgxpod.nvidia.com:6443 check
    server master-2 master-2.dgxpod.nvidia.com:6443 check

listen machine-config-server
    bind *:22623
    mode tcp
    server bootstrap bootstrap.dgxpod.nvidia.com:22623 check
    server master-0 master-0.dgxpod.nvidia.com:22623 check
    server master-1 master-1.dgxpod.nvidia.com:22623 check
    server master-2 master-2.dgxpod.nvidia.com:22623 check
```
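All four listen sections follow one pattern: TCP pass-through on a port, balanced across a list of hosts with health checks. The Python sketch below generates them from the node lists, which also makes it easy to regenerate the file without the bootstrap entries once the bootstrap node has been deleted. The function and parameter names are illustrative, and the sketch emits only the listen sections shown above, not HAProxy's global or defaults sections.

```python
DOMAIN = "dgxpod.nvidia.com"


def listen_block(name: str, port: int, servers: list) -> str:
    """One TCP pass-through 'listen' section balancing across the given hosts."""
    lines = [f"listen {name}", f"    bind *:{port}", "    mode tcp"]
    lines += [f"    server {s} {s}.{DOMAIN}:{port} check" for s in servers]
    return "\n".join(lines)


def haproxy_config(workers: list, masters: list, include_bootstrap: bool = True) -> str:
    """Ingress goes to the workers; the API and machine config server to the control plane."""
    control_plane = (["bootstrap"] if include_bootstrap else []) + masters
    blocks = [
        listen_block("ingress-http", 80, workers),
        listen_block("ingress-https", 443, workers),
        listen_block("api", 6443, control_plane),
        listen_block("machine-config-server", 22623, control_plane),
    ]
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    print(haproxy_config(["worker-0", "worker-1"], ["master-0", "master-1", "master-2"]))
```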

Creating the Bootstrap and Master Nodes

The virt-install command makes it easy to deploy the bootstrap and master nodes. Replace NODE-NAME with the actual name of the node and NODE-MAC with the corresponding network (MAC) address for the node.

```shell
virt-install --connect qemu:///system \
  --name <NODE-NAME> \
  --ram 8192 --vcpus 4 \
  --os-type=linux --os-variant=virtio26 \
  --disk path=/var/lib/libvirt/images/<NODE-NAME>.qcow2,device=disk,bus=virtio,format=qcow2,size=20 \
  --pxe --network bridge=virbr0 -m <NODE-MAC> \
  --graphics vnc,listen=0.0.0.0 --noautoconsole
```
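With four VMs to create (bootstrap plus three masters), generating the commands avoids editing placeholders by hand. The Python sketch below builds the same argument list as the command above, including its -m MAC option, for each node. The MAC addresses are made-up placeholders that must match what your DHCP server expects; by default the script only prints the commands and runs them only when called with --run.

```python
import shlex
import subprocess
import sys

# HYPOTHETICAL MAC addresses; replace with the ones your DHCP/PXE setup uses.
NODES = {
    "bootstrap": "52:54:00:00:00:53",
    "master-0": "52:54:00:00:00:50",
    "master-1": "52:54:00:00:00:51",
    "master-2": "52:54:00:00:00:52",
}


def virt_install_args(name: str, mac: str) -> list:
    """Argument list mirroring the virt-install command shown above."""
    return [
        "virt-install", "--connect", "qemu:///system",
        "--name", name, "--ram", "8192", "--vcpus", "4",
        "--os-type=linux", "--os-variant=virtio26",
        "--disk", f"path=/var/lib/libvirt/images/{name}.qcow2,"
                  "device=disk,bus=virtio,format=qcow2,size=20",
        "--pxe", "--network", "bridge=virbr0", "-m", mac,
        "--graphics", "vnc,listen=0.0.0.0", "--noautoconsole",
    ]


if __name__ == "__main__":
    run = "--run" in sys.argv[1:]
    for node, mac in NODES.items():
        args = virt_install_args(node, mac)
        print(shlex.join(args))  # show the exact command line
        if run:
            subprocess.run(args, check=True)
```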

After the initial installation completes, each VM shuts down and must be restarted manually. The state of the VMs can be monitored with sudo virsh list, which prints all active VMs. Once a VM disappears from the list, restart it with virsh start <NODE-NAME>. In the prerelease version of OpenShift, resolv.conf wasn't updated, so each node had to be rebooted one more time with virsh reset.

Assuming correct settings and configurations, installing the entire cluster should take less than an hour. The initial bootstrapping progress can be monitored with the following command.

```shell
openshift-install --dir ignition wait-for bootstrap-complete
```

Once bootstrapping completes, the bootstrap node can be deleted. Next, to wait for the entire installation process to complete, use:

```shell
openshift-install --dir ignition wait-for install-complete
```

The prerelease version of the installer reported errors at times but eventually completed successfully. Because it also didn't automatically approve pending certificate signing requests (CSRs), we added the following crontab entry, which runs every 10 minutes.

```
*/10 * * * * dgxuser oc get csr -ojson | jq -r '.items[] | select(.status == {} ) | .metadata.name' | xargs -r oc adm certificate approve
```
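The jq filter selects CSRs whose status is still empty, that is, requests that nobody has approved or denied yet. The same selection can be written in Python, which is easier to extend, for example to log what gets approved. The sketch below reads the output of oc get csr -o json from standard input and prints the pending names one per line, so it can stand in for the jq step; unlike the jq filter, it also treats a missing status as pending. It is an illustration, not an OpenShift tool, and the script name in the comment is hypothetical.

```python
import json
import sys


def pending_csr_names(csr_list: dict) -> list:
    """Names of CSRs whose status is empty or missing (neither approved nor denied)."""
    return [
        item["metadata"]["name"]
        for item in csr_list.get("items", [])
        if not item.get("status")
    ]


if __name__ == "__main__" and not sys.stdin.isatty():
    # Usage: oc get csr -o json | python3 pending_csrs.py | xargs -r oc adm certificate approve
    data = sys.stdin.read()
    if data.strip():
        for name in pending_csr_names(json.loads(data)):
            print(name)
```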

GPU Support

NVIDIA and Red Hat continue to work together to provide a straightforward mechanism for deploying and managing GPU drivers. The Node Feature Discovery (NFD) Operator and the GPU Operator build the foundation for this improved mechanism and will be available from the Red Hat OperatorHub. This keeps an optimized software stack deployed at all times. The following instructions describe the manual steps to install these operators.

NFD detects hardware features and configurations in the OpenShift cluster, such as CPU type and extensions or, in our case, NVIDIA GPUs.

```shell
git clone https://github.com/openshift/cluster-nfd-operator
cd cluster-nfd-operator/manifests
oc create -f .
oc create -f cr/nfd_cr.yaml
```

After the installation completes, the NVIDIA GPU should show up in the feature list of the worker nodes. For now, the label uses the PCI vendor ID (0x10de for NVIDIA); the final software will provide a human-readable name instead.

```shell
$ oc describe node worker-0 | grep 10de
feature.node.kubernetes.io/pci-10de.present=true
```
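Because NFD publishes its result as a node label, the GPU nodes can also be selected by label, for example with oc get nodes -l feature.node.kubernetes.io/pci-10de.present=true. When the list feeds into another script, the same filtering can be done on the JSON node list, as in the Python sketch below. The script name in the comment is hypothetical, and the sketch only relies on the label shown above.

```python
import json
import sys

# Label set by NFD when a PCI device with vendor ID 0x10de (NVIDIA) is present.
NVIDIA_LABEL = "feature.node.kubernetes.io/pci-10de.present"


def gpu_nodes(node_list: dict) -> list:
    """Names of nodes on which NFD detected an NVIDIA PCI device."""
    return [
        node["metadata"]["name"]
        for node in node_list.get("items", [])
        if node["metadata"].get("labels", {}).get(NVIDIA_LABEL) == "true"
    ]


if __name__ == "__main__" and not sys.stdin.isatty():
    # Usage: oc get nodes -o json | python3 gpu_nodes.py
    data = sys.stdin.read()
    if data.strip():
        print("\n".join(gpu_nodes(json.loads(data))))
```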

The Special Resource Operator (SRO) provides a template for accelerator cards. It activates when a supported component is detected and installs the correct drivers and other software components.

The development version of the Special Resource Operator already includes support for NVIDIA GPUs and will merge into the NVIDIA GPU Operator when it becomes available. It manages the installation process of all required NVIDIA drivers and software components.

```shell
git clone https://github.com/zvonkok/special-resource-operator
cd special-resource-operator/manifests
oc create -f .
cd cr
oc create -f sro_cr_sched_none.yaml
```

The following nvidia-smi.yaml file defines a Kubernetes Pod that can be used for a quick validation. It allocates a single GPU and runs the nvidia-smi command.

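```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nvidia-smi
spec:
  # Run nvidia-smi once instead of restarting the container after it exits
  restartPolicy: Never
  containers:
  - image: nvidia/cuda
    name: nvidia-smi
    command: [ nvidia-smi ]
    resources:
      limits:
        nvidia.com/gpu: 1
      requests:
        nvidia.com/gpu: 1
```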

Running oc create -f nvidia-smi.yaml creates and starts the pod. To monitor the progress of the pod creation, use oc describe pod nvidia-smi. When the pod has completed, the output of the nvidia-smi command can be viewed with oc logs nvidia-smi:

```
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.56       Driver Version: 418.56       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla V100-SXM2...  On   | 00000000:86:00.0 Off |                    0 |
| N/A   36C    P0    41W / 300W |      0MiB / 16130MiB |      1%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```
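For automated validation, such as a CI job that runs after each cluster upgrade, the versions in the first line of this output can be checked programmatically. The Python sketch below extracts the driver and CUDA versions from the text returned by oc logs nvidia-smi and compares them against a minimum. The regular expression assumes the header format shown above and may need adjusting for other nvidia-smi releases; the minimum versions you pass in are your own choice.

```python
import re

# ASSUMPTION: header line looks like "... Driver Version: 418.56  CUDA Version: 10.1 ..."
HEADER = re.compile(
    r"Driver Version:\s*(?P<driver>[\d.]+)\s+CUDA Version:\s*(?P<cuda>[\d.]+)"
)


def smi_versions(log_text: str) -> dict:
    """Return {'driver': ..., 'cuda': ...} parsed from nvidia-smi output."""
    match = HEADER.search(log_text)
    if match is None:
        raise ValueError("no nvidia-smi header found")
    return match.groupdict()


def at_least(version: str, minimum: str) -> bool:
    """Compare dotted numeric versions component by component."""
    return tuple(map(int, version.split("."))) >= tuple(map(int, minimum.split(".")))


if __name__ == "__main__":
    sample = "| NVIDIA-SMI 418.56       Driver Version: 418.56       CUDA Version: 10.1     |"
    print(smi_versions(sample))
```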

Finally, the pod can be deleted with oc delete pod nvidia-smi.

Conclusion

Operators and an immutable infrastructure built on top of Red Hat Enterprise Linux CoreOS bring exciting improvements to OpenShift 4. Together, they simplify deploying and managing an optimized software stack for large-scale, multi-node, GPU-accelerated data centers. These new features already look solid in the preview, and we think customers will be pleased to use them going forward.