NVIDIA NeMo Guardrails for Developers

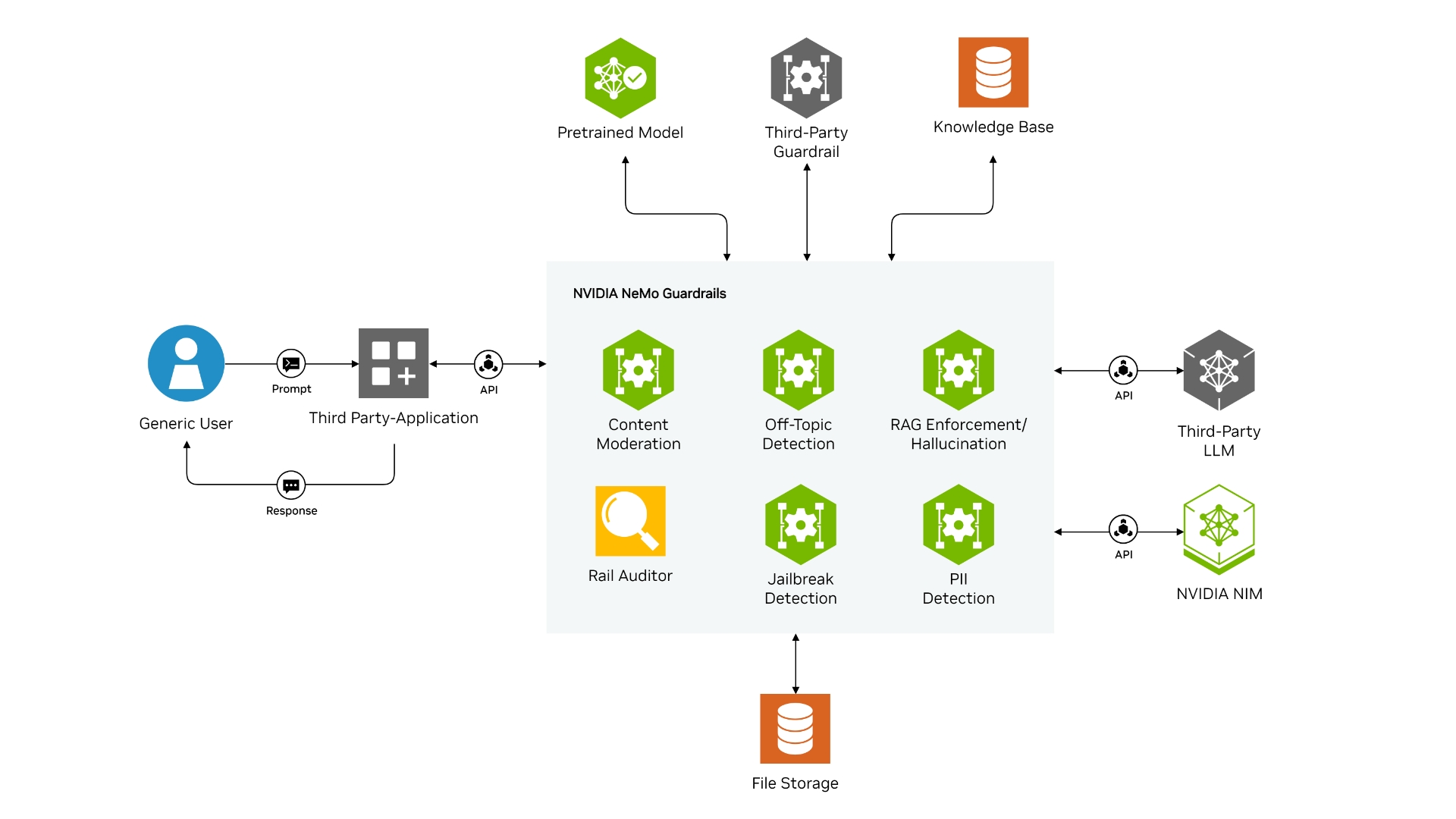

NVIDIA NeMo? Guardrails simplifies scalable AI guardrail orchestration for safeguarding generative AI applications. With NeMo Guardrails, you can define, orchestrate, and enforce multiple AI guardrails to ensure the safety, security, accuracy, and topical relevance of large language model (LLM) interactions. Extensible and customizable, it is easy to use with popular gen AI development frameworks, including LangChain and LlamaIndex, along with a growing ecosystem of AI safety models, rails, and observability tools.

The newly announced NeMo Guardrails microservice further simplifies rail orchestration with API-based interaction and tools for enhanced guardrail management and maintenance.

NeMo Guardrails, along with the other NeMo microservices, enables developers to create data flywheels to continuously optimize generative AI agents, enhancing the overall end-user experience.

See NVIDIA NeMo Guardrails in Action

Implementing AI guardrails to build safe and secure LLM applications.

How NVIDIA NeMo Guardrails Works

AI guardrail orchestration to keep LLM applications secure and on track.

NeMo Guardrails simplifies the orchestration of AI guardrails to include content safety, topic control, PII detection, retrieval-augmented generation (RAG) enforcement, and jailbreak prevention. NeMo Guardrails leverages Colang for designing flexible dialogue flows and is compatible with popular LLMs and frameworks like LangChain. It's a simple, easy-to-use AI application framework that makes AI applications like AI agents, co-pilots, and chatbots safer, customizable, and compliant.

Introductory Blog

Simplify building trustworthy LLM apps with AI guardrails for safety, security, and control.

Documentation

Explore resources for getting started, such as examples, the user guide, security guidelines, evaluation tools, and more.

Example Configurations

The configurations in this folder showcase various features of NeMo Guardrails, e.g., using a specific LLM, enabling streaming, and enabling fact-checking.

Customer Assistant Example

Learn how to integrate advanced content moderation, jailbreak detection, and topic control with NeMo Guardrails NIM microservices.

Ways to Get Started With NVIDIA NeMo Guardrails

Use the right tools and technologies to safeguard AI applications with NeMo Guardrails scalable AI guardrail orchestration platform.

Develop

Get free access to the NeMo Guardrails microservice for research, development, and testing.

Download NowAccess SDK

To use the latest features and source code for adding AI guardrails to LLM applications, NeMo Guardrails is available as an open-source project on GitHub.

Deploy

Get a free license to try NVIDIA AI Enterprise in production for 90 days using your existing infrastructure.

Performance

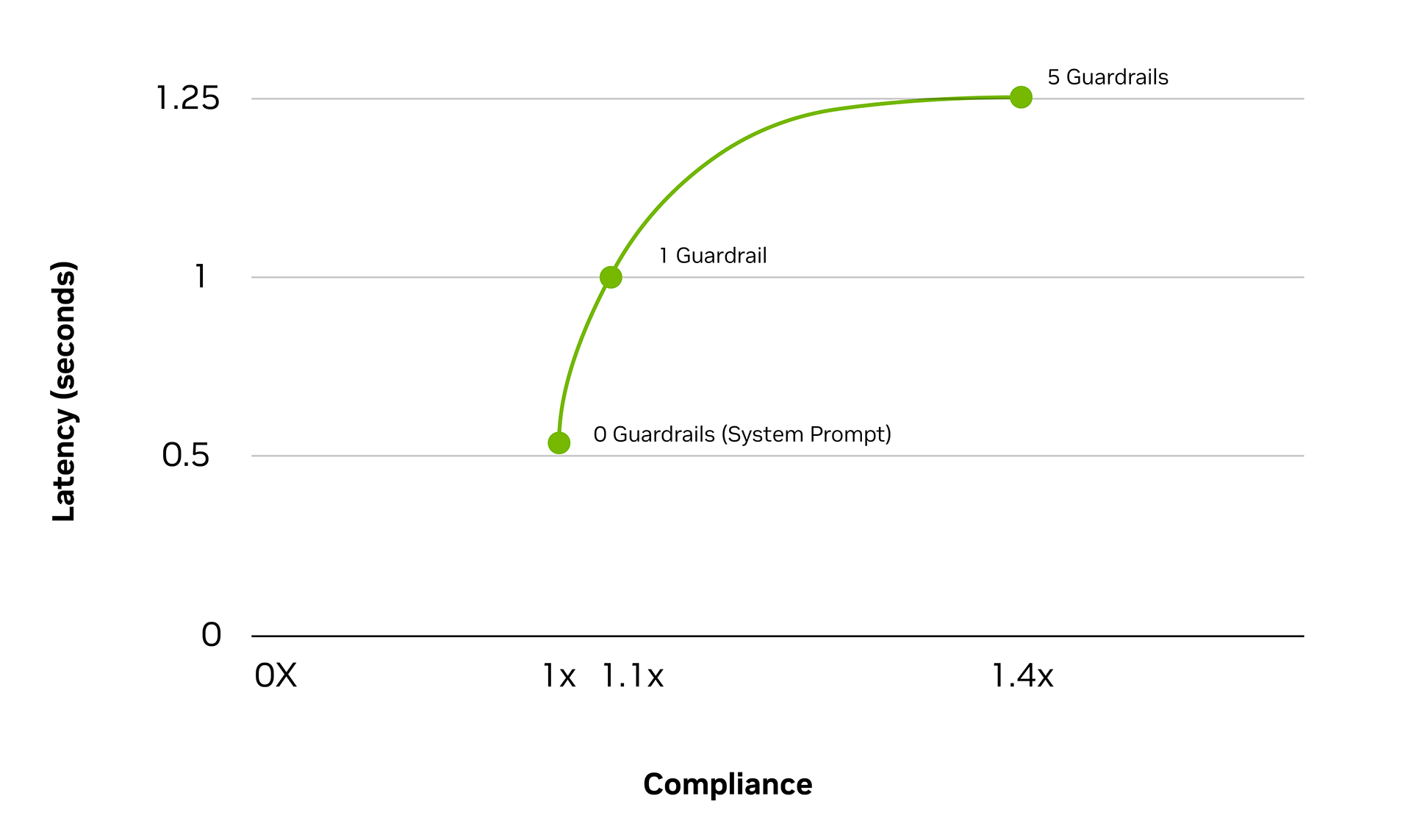

NeMo Guardrails enables AI guardrails to ensure LLM inferences are safe, secure, and compliant. Experience up to 1.5X improvement in compliance rate with a mere half-second of latency. Keep Enterprise AI operations safe and reliable by enforcing custom rules for AI models, agents, and systems. Use prepackaged NVIDIA NIM? microservices that are optimized to make it easier to deploy.

Experience Over 1.4X Improvement in Compliance Rate With Only Half a Second of Latency With NeMo Guardrails

Evaluated Policy Compliance With 5 AI Guardrails

The benchmark represents customizing Llama-3-8B on one 8xH100 80G SXM with sequence packing (4096 pack size, 0.9958 packing efficiency).

On: Customized with NeMo Customizer.

Off: Customized with leading market alternatives.

NVIDIA NeMo Guardrails Learning Library

More Resources

Ethical AI

NVIDIA’s platforms and application frameworks enable developers to build a wide array of AI applications. Consider potential algorithmic bias when choosing or creating the models being deployed. Work with the model’s developer to ensure that it meets the requirements for the relevant industry and use case; that the necessary instruction and documentation are provided to understand error rates, confidence intervals, and results; and that the model is being used under the conditions and in the manner intended.