Description

The DNN Plugin sample loads and runs an MNIST network with a custom implementation of the max pooling layer.

PoolPlugin.cpp implements this layer and defines the functions that DriveWorks requires to load a plugin. The file is compiled as a shared library, which the sample_dnn_plugin executable loads at runtime.
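PoolPlugin.cpp itself is not reproduced here. As a point of reference for what a max pooling layer computes, the following is a minimal CPU sketch of 2D max pooling over a CHW tensor. It assumes no padding and a square window. It is not the DriveWorks plugin interface and not the sample's implementation; `maxPool2D` and its parameters are hypothetical names chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical reference implementation (not the sample's PoolPlugin.cpp):
// 2D max pooling over a CHW tensor stored contiguously in row-major order.
// Assumes no padding and a square window; each channel is pooled independently.
std::vector<float> maxPool2D(const std::vector<float>& input,
                             std::size_t channels, std::size_t height, std::size_t width,
                             std::size_t window, std::size_t stride,
                             std::size_t& outHeight, std::size_t& outWidth)
{
    assert(window > 0 && stride > 0);
    assert(height >= window && width >= window);
    assert(input.size() == channels * height * width);

    // Output extent without padding: floor((in - window) / stride) + 1.
    outHeight = (height - window) / stride + 1;
    outWidth = (width - window) / stride + 1;
    std::vector<float> output(channels * outHeight * outWidth);

    for (std::size_t c = 0; c < channels; ++c) {
        const float* in = input.data() + c * height * width;
        float* out = output.data() + c * outHeight * outWidth;
        for (std::size_t oy = 0; oy < outHeight; ++oy) {
            for (std::size_t ox = 0; ox < outWidth; ++ox) {
                // Take the maximum over the window that maps to this output cell.
                float best = -std::numeric_limits<float>::infinity();
                for (std::size_t ky = 0; ky < window; ++ky) {
                    for (std::size_t kx = 0; kx < window; ++kx) {
                        const std::size_t iy = oy * stride + ky;
                        const std::size_t ix = ox * stride + kx;
                        best = std::max(best, in[iy * width + ix]);
                    }
                }
                out[oy * outWidth + ox] = best;
            }
        }
    }
    return output;
}
```

For example, a 1×4×4 input holding the values 0 through 15 in row-major order, pooled with a 2×2 window and stride 2, yields the 2×2 output [5, 7, 13, 15]. A plugin running on the GPU would typically parallelize the two output loops, but the arithmetic per output cell is the same.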

Running the Sample

The command line for the sample is:

./sample_dnn_plugin

The sample accepts the following optional parameter:

./sample_dnn_plugin --tensorRT_model=[path/to/TensorRT/model]

Where:

--tensorRT_model=[path/to/TensorRT/model]

Specifies the path to the NVIDIA® TensorRT™ model file.

The loaded network is expected to have an output blob named "prob".

Default value: path/to/data/samples/dnn/<gpu-architecture>/mnist.bin, where <gpu-architecture> can be `ampere-discrete` or `ampere-integrated`.
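The optional parameter and its default can be illustrated with a small sketch of how a `--tensorRT_model=` value might be resolved, falling back to the documented default path when the flag is absent. This is a hypothetical helper written for illustration only; it is not how DriveWorks parses its command line, and `defaultModelPath`, `resolveModelPath`, and the `dataRoot` argument (standing in for `path/to/data`) are assumed names.

```cpp
#include <string>
#include <vector>

// Hypothetical helper (not part of DriveWorks): builds the documented default
// model path, where gpuArchitecture is e.g. "ampere-discrete" or "ampere-integrated".
std::string defaultModelPath(const std::string& dataRoot, const std::string& gpuArchitecture)
{
    return dataRoot + "/samples/dnn/" + gpuArchitecture + "/mnist.bin";
}

// Hypothetical helper: returns the value of the first --tensorRT_model=...
// argument if one is present, otherwise the supplied fallback path.
std::string resolveModelPath(const std::vector<std::string>& args,
                             const std::string& fallback)
{
    const std::string prefix = "--tensorRT_model=";
    for (const std::string& arg : args) {
        // compare() returns 0 when arg starts with the prefix.
        if (arg.compare(0, prefix.size(), prefix) == 0) {
            return arg.substr(prefix.size());
        }
    }
    return fallback;
}
```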

Output

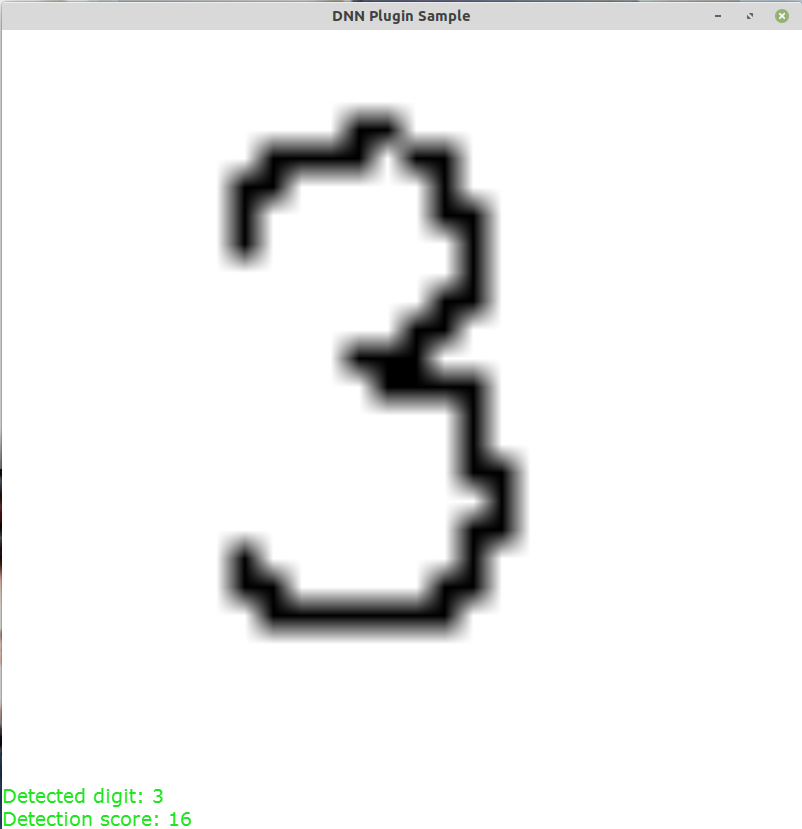

The sample creates a window that displays a white screen, where a hand-drawn digit is recognized by the MNIST network model described above.

Digit being recognized using DNN with a plugin
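Turning the network output into a recognized digit usually amounts to picking the highest-scoring class. The sketch below assumes that the "prob" output blob holds one score per digit, with element i corresponding to digit i; the source does not state this layout, and `predictDigit` is a hypothetical name, not a DriveWorks function.

```cpp
#include <algorithm>
#include <iterator>
#include <vector>

// Hypothetical helper: returns the index of the highest score in the "prob"
// output, assuming element i holds the score for digit i (0-9).
// Returns -1 if the output is empty. Ties resolve to the lowest index.
int predictDigit(const std::vector<float>& prob)
{
    if (prob.empty()) {
        return -1;  // nothing to interpret
    }
    auto best = std::max_element(prob.begin(), prob.end());
    return static_cast<int>(std::distance(prob.begin(), best));
}
```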

Additional Information

For more information, see DNN Plugins.