Chip to Chip Communication

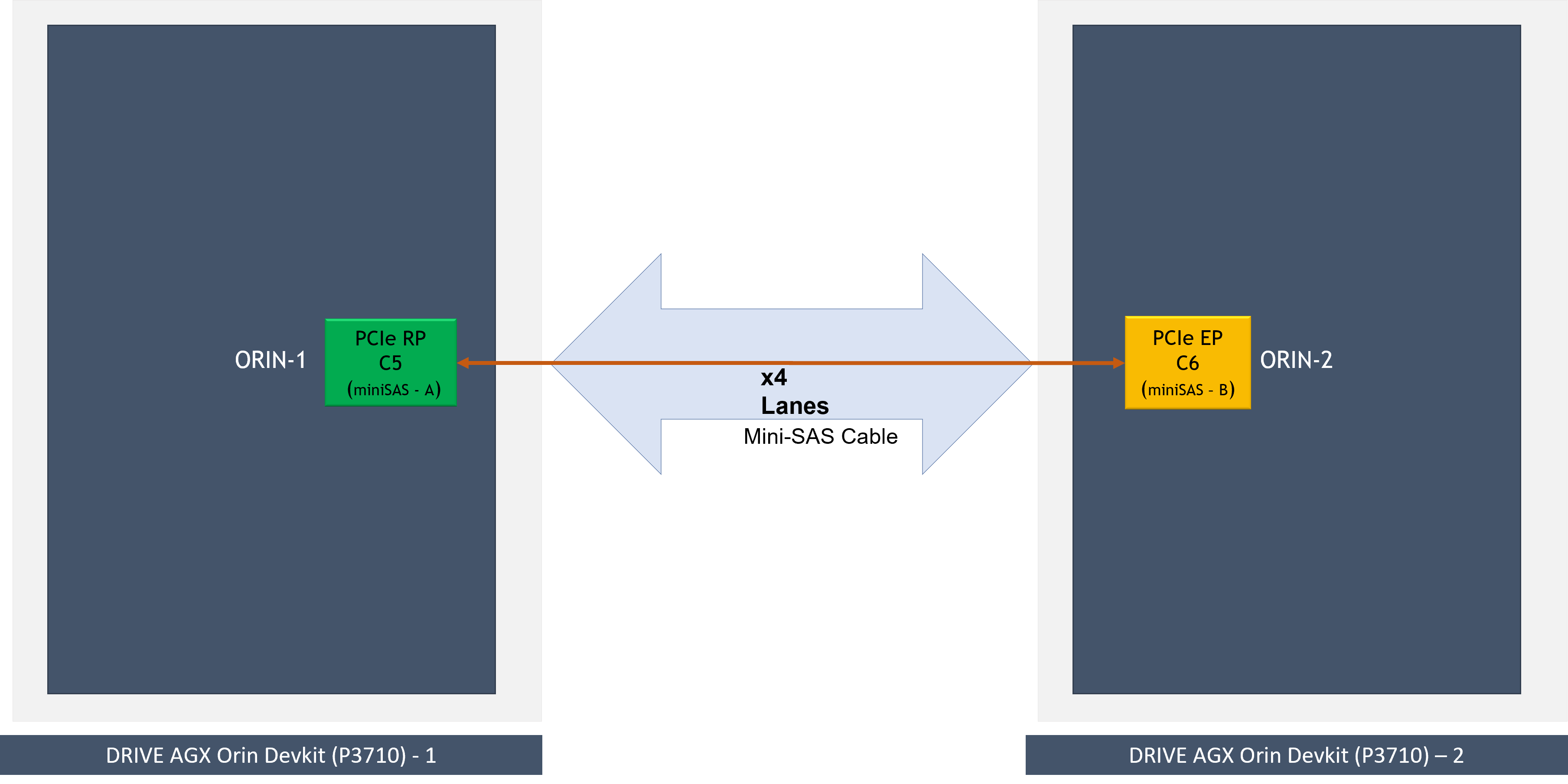

The NVIDIA® Software Communication Interface for Chip to Chip over direct PCIe connection (NvSciC2cPcie) lets user applications exchange data between two NVIDIA DRIVE AGX Orin™ DevKits interconnected over a direct PCIe connection. In this connection, one NVIDIA DRIVE AGX Orin DevKit acts as the PCIe Root Port and the other NVIDIA DRIVE AGX Orin DevKit acts as the PCIe Endpoint.

Supported Platform Configurations

Platform

- NVIDIA DRIVE® AGX Orin DevKit

- NVIDIA DRIVE Recorder

- NVIDIA DRIVE Orin as PCIe Root Port

- NVIDIA DRIVE Orin as PCIe Endpoint

- NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port <> NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint

- NVIDIA DRIVE Recorder Orin A as PCIe Endpoint <> NVIDIA DRIVE Recorder Orin B as Root Port

Platform Setup

The following platform configurations are required for NvSciC2cPcie communication with NVIDIA DRIVE AGX Orin DevKit. Similar connections are required for other platforms.

- miniSAS Port-A of NVIDIA DRIVE AGX Orin DevKit-1 is connected to miniSAS Port-B of NVIDIA DRIVE AGX Orin DevKit-2 with a PCIe miniSAS cable.

- The PCIe controllers of the two NVIDIA DRIVE AGX Orin DevKits when interconnected back-to-back have PCIe re-timers, and the PCIe re-timer firmware must be flashed for the appropriate PCIe lane configuration.

- For custom platforms, configure the lanes and clock of the PCIe controllers in use accordingly.

- Each PCIe controller in the NVIDIA DRIVE AGX Orin DevKit has a PCIe EDMA engine. NvSciC2cPcie uses only one DMA write channel of the assigned PCIe controller for all NvSciC2cPcie transfers.

- NvSciC2cPcie transfers are processed in FIFO order. There is no load balancing or scheduling policy to prioritize a specific request.

Execution Setup

Linux Kernel Module Insertion

NvSciC2cPcie only runs on select platforms: NVIDIA DRIVE AGX Orin DevKit and NVIDIA DRIVE Recorder. Before user applications can exercise the NvSciC2cPcie interface, you must insert the Linux kernel modules for NvSciC2cPcie. They are not loaded by default when NVIDIA DRIVE® OS Linux boots. To insert the required Linux kernel module:

- On first/one Orin configured as PCIe Root Port

sudo modprobe nvscic2c-pcie-epc

- On second/other Orin DevKit configured as PCIe Endpoint

sudo modprobe nvscic2c-pcie-epf

It is recommended to load the nvscic2c-pcie-ep* kernel modules immediately after boot. This allows the nvscic2c-pcie software stack to allocate contiguous physical pages for its internal operation for each of the configured nvscic2c-pcie endpoints.

PCIe Hot-Plug

Once loaded, Orin DevKit enabled as PCIe Endpoint is hot-plugged and enumerated as a PCIe device with Orin DevKit configured as PCIe Root Port (miniSAS cable connected to miniSAS port-A). The following must be executed on Orin DevKit configured as PCIe Endpoint (miniSAS cable connected to miniSAS port-B):

sudo -s

cd /sys/kernel/config/pci_ep/

mkdir functions/nvscic2c_epf_22CC/func

echo 0x10DE > functions/nvscic2c_epf_22CC/func/vendorid

echo 0x22CC > functions/nvscic2c_epf_22CC/func/deviceid

ln -s functions/nvscic2c_epf_22CC/func controllers/141c0000.pcie_ep

echo 0 > controllers/141c0000.pcie_ep/start

echo 1 > controllers/141c0000.pcie_ep/start

The previous steps, including Linux kernel module insertion, can be added as a Linux systemd service so that the NvSciC2cPcie software is available automatically at boot.
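As a minimal sketch of how the Endpoint-side steps above could be parameterized before wrapping them in a boot-time service, the following Python helper only builds the command strings and does not execute anything. The function name and its parameters are hypothetical, and deriving the function directory name from the device ID is an assumption based on the single example shown above.

```python
def endpoint_bringup_commands(controller="141c0000.pcie_ep",
                              vendor_id=0x10DE, device_id=0x22CC):
    """Return the Endpoint-side command sequence as a list of strings.

    Nothing is executed here. The caller decides how to run the commands
    (for example as root from a boot-time script).
    """
    # Assumption: the function directory name embeds the device ID,
    # as in the documented example nvscic2c_epf_22CC.
    func = f"functions/nvscic2c_epf_{device_id:04X}/func"
    ctrl = f"controllers/{controller}"
    return [
        "modprobe nvscic2c-pcie-epf",          # Linux kernel module insertion
        "cd /sys/kernel/config/pci_ep/",       # PCIe endpoint configfs root
        f"mkdir {func}",
        f"echo 0x{vendor_id:04X} > {func}/vendorid",
        f"echo 0x{device_id:04X} > {func}/deviceid",
        f"ln -s {func} {ctrl}",                # bind function to controller
        f"echo 0 > {ctrl}/start",
        f"echo 1 > {ctrl}/start",              # start the Endpoint controller
    ]


if __name__ == "__main__":
    for line in endpoint_bringup_commands():
        print(line)
```

Keeping the controller name as a parameter makes it easier to reuse the same sequence on a platform where the Endpoint controller differs from the DevKit default.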

NvSciIpc (INTER_CHIP, PCIe) Channels

Once the Linux kernel module insertion and PCIe hot-plug complete successfully, the following NvSciIpc channels are available for use with NvStreams producer or consumer applications.

NVIDIA DRIVE AGX Orin DevKit

| NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port | NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint |
|---|---|
| nvscic2c_pcie_s0_c5_1 | nvscic2c_pcie_s0_c6_1 |
| nvscic2c_pcie_s0_c5_2 | nvscic2c_pcie_s0_c6_2 |
| nvscic2c_pcie_s0_c5_3 | nvscic2c_pcie_s0_c6_3 |
| nvscic2c_pcie_s0_c5_4 | nvscic2c_pcie_s0_c6_4 |
| nvscic2c_pcie_s0_c5_5 | nvscic2c_pcie_s0_c6_5 |
| nvscic2c_pcie_s0_c5_6 | nvscic2c_pcie_s0_c6_6 |
| nvscic2c_pcie_s0_c5_7 | nvscic2c_pcie_s0_c6_7 |
| nvscic2c_pcie_s0_c5_8 | nvscic2c_pcie_s0_c6_8 |
| nvscic2c_pcie_s0_c5_9 | nvscic2c_pcie_s0_c6_9 |
| nvscic2c_pcie_s0_c5_10 | nvscic2c_pcie_s0_c6_10 |
| nvscic2c_pcie_s0_c5_11 | nvscic2c_pcie_s0_c6_11 |

| nvscic2c_pcie_s0_c5_11 | nvscic2c_pcie_s0_c6_11 |

nvscic2c_pcie_s0_c5_12 and nvscic2c_pcie_s0_c6_12 are not available for use with NvStreams over Chip to Chip connection. They can be used only for NvSciIpc over Chip to Chip (INTER_CHIP, PCIe), specifically for short, infrequent, general-purpose data. For additional information, see NvSciIpc API Usage.

NVIDIA DRIVE Recorder

| NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port | NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint |
|---|---|
| nvscic2c_pcie_s2_c6_1 | nvscic2c_pcie_s1_c6_1 |
| nvscic2c_pcie_s2_c6_2 | nvscic2c_pcie_s1_c6_2 |
| nvscic2c_pcie_s2_c6_3 | nvscic2c_pcie_s1_c6_3 |
| nvscic2c_pcie_s2_c6_4 | nvscic2c_pcie_s1_c6_4 |
| nvscic2c_pcie_s2_c6_5 | nvscic2c_pcie_s1_c6_5 |
| nvscic2c_pcie_s2_c6_6 | nvscic2c_pcie_s1_c6_6 |
| nvscic2c_pcie_s2_c6_7 | nvscic2c_pcie_s1_c6_7 |
| nvscic2c_pcie_s2_c6_8 | nvscic2c_pcie_s1_c6_8 |
| nvscic2c_pcie_s2_c6_9 | nvscic2c_pcie_s1_c6_9 |
| nvscic2c_pcie_s2_c6_10 | nvscic2c_pcie_s1_c6_10 |
| nvscic2c_pcie_s2_c6_11 | nvscic2c_pcie_s1_c6_11 |

NvSciIpc (INTER_CHIP, PCIe) channel names can be changed as convenient.

When the user application on the NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port opens nvscic2c_pcie_s0_c5_1, the peer user application on the other NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint must open nvscic2c_pcie_s0_c6_1 to exchange data across the SoCs. The same pairing applies to the remaining channels listed previously. Similarly, for other platforms, endpoints must open the respective channels as per the previous channel tables.

By default, each NvSciIpc (INTER_CHIP, PCIe) channel is configured with 16 frames of 32 KB each.
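To make the channel pairing and the default sizing concrete, here is a small Python sketch. It assumes the default NVIDIA DRIVE AGX Orin DevKit channel names from the table above (it does not cover renamed channels or the NVIDIA DRIVE Recorder names), and both function names are hypothetical helpers, not part of any NVIDIA API.

```python
import re

# Matches only the default DevKit names, e.g. nvscic2c_pcie_s0_c5_1.
_CHANNEL_RE = re.compile(r"^nvscic2c_pcie_s0_c(5|6)_(\d+)$")


def peer_channel(name: str) -> str:
    """Return the channel the peer SoC must open for a default DevKit channel."""
    match = _CHANNEL_RE.match(name)
    if match is None:
        raise ValueError(f"not a default DevKit channel name: {name!r}")
    controller, index = match.groups()
    # C5 is the Root Port side and C6 the Endpoint side in the default setup.
    peer_controller = "6" if controller == "5" else "5"
    return f"nvscic2c_pcie_s0_c{peer_controller}_{index}"


def channel_payload_bytes(frames: int = 16, frame_size: int = 32 * 1024) -> int:
    """Total frame payload of one channel: frames multiplied by frame size."""
    return frames * frame_size


if __name__ == "__main__":
    print(peer_channel("nvscic2c_pcie_s0_c5_1"))
    print(channel_payload_bytes())
```

With the default of 16 frames of 32 KB, the frame payload of one channel works out to 512 KB.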

Reconfiguration

The following reconfiguration information is based on the default NvSciC2cPcie support offered for NVIDIA DRIVE AGX Orin DevKit.

A different platform, or a different PCIe controller configuration on the same NVIDIA DRIVE AGX Orin DevKit, requires adding a new set of device-tree node entries for NvSciC2cPcie on a PCIe Root Port (nvidia,tegra-nvscic2c-pcie-epc) and a PCIe Endpoint (nvidia,tegra-nvscic2c-pcie-epf). For example, changing the PCIe controller ID, or changing the role of a PCIe controller from PCIe Root Port to PCIe Endpoint (or vice versa) relative to the default NVIDIA DRIVE AGX Orin DevKit configuration, requires such changes. These changes are possible through device-tree node changes or additions, but they are not all documented here. They are one-time changes and can be made in coordination with your NVIDIA point of contact.

BAR Size

BAR size for NVIDIA DRIVE AGX Orin as PCIe Endpoint is configured to 1 GB by default. When required, this can be reduced or increased by modifying the nvidia,bar-win-size property of the device-tree node nvscic2c-pcie-s0-c6-epf.

File:

<PDK_TOP>/drive-linux/kernel/source/hardware/nvidia/platform/t23x/automotive/kernel-dts/p3710/common/tegra234-p3710-0010-nvscic2c-pcie.dtsi

nvscic2c-pcie-s0-c6-epf {

compatible = "nvidia,tegra-nvscic2c-pcie-epf";

-- nvidia,bar-win-size = <0x40000000>; /* 1GB. */

++ nvidia,bar-win-size = <0x20000000>; /* 512MB. */

};

The configured BAR size must be a power of 2 and at least 64 MB. The maximum BAR size depends on the size of pre-fetchable memory supported by the PCIe Root Port. With NVIDIA DRIVE AGX, the maximum BAR size should be 0xf000 less than the maximum pre-fetchable memory.
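The two platform-independent BAR size rules (power of 2, at least 64 MB) can be checked before editing the device tree. The following Python sketch does only that. The function name is hypothetical, and the upper bound is deliberately not checked because it depends on the pre-fetchable memory supported by the PCIe Root Port.

```python
MIN_BAR_SIZE = 64 * 1024 * 1024  # 64 MB minimum stated above


def is_valid_bar_size(size: int) -> bool:
    """Check the power-of-2 and minimum-size rules for nvidia,bar-win-size.

    The platform-dependent maximum is NOT checked here.
    """
    # A positive integer is a power of 2 when exactly one bit is set.
    is_power_of_two = size > 0 and (size & (size - 1)) == 0
    return is_power_of_two and size >= MIN_BAR_SIZE


if __name__ == "__main__":
    for candidate in (0x40000000, 0x20000000, 0x30000000):
        print(hex(candidate), is_valid_bar_size(candidate))
```

Both values from the example above pass (0x40000000 for 1 GB and 0x20000000 for 512 MB), while a value such as 0x30000000 is rejected because it is not a power of 2.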

NvSciIpc (INTER_CHIP, PCIe) Channel Properties

The NvSciIpc (INTER_CHIP, PCIe) channel properties can be modified on a use-case basis.

Modify Channel Properties

To change the channel properties (number of frames and frame size), the change must be made in the device-tree nodes of both the NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port and the NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint: nvscic2c-pcie-s0-c5-epc and nvscic2c-pcie-s0-c6-epf, respectively.

File:

<PDK_TOP>/drive-linux/kernel/source/hardware/nvidia/platform/t23x/automotive/kernel-dts/p3710/common/tegra234-p3710-0010-nvscic2c-pcie.dtsi

The following illustrates a change in the number of frames for the NvSciIpc (INTER_CHIP, PCIe) channels nvscic2c_pcie_s0_c5_2 (PCIe Root Port) and nvscic2c_pcie_s0_c6_2 (PCIe Endpoint):

nvscic2c-pcie-s0-c5-epc {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c5_1, 16, 00032768",

-- "nvscic2c_pcie_s0_c5_2, 16, 00032768",

++ "nvscic2c_pcie_s0_c5_2, 08, 00032768",

"nvscic2c_pcie_s0_c5_3, 16, 00032768",

…..

};

nvscic2c-pcie-s0-c6-epf {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c6_1, 16, 00032768",

-- "nvscic2c_pcie_s0_c6_2, 16, 00032768",

++ "nvscic2c_pcie_s0_c6_2, 08, 00032768",

"nvscic2c_pcie_s0_c6_3, 16, 00032768",

…..

};

The following illustrates a change in frame size for the NvSciIpc (INTER_CHIP, PCIe) channels nvscic2c_pcie_s0_c5_2 (PCIe Root Port) and nvscic2c_pcie_s0_c6_2 (PCIe Endpoint):

nvscic2c-pcie-s0-c5-epc {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c5_1, 16, 00032768",

-- "nvscic2c_pcie_s0_c5_2, 16, 00032768",

++ "nvscic2c_pcie_s0_c5_2, 16, 00028672",

"nvscic2c_pcie_s0_c5_3, 16, 00032768",

…..

};

nvscic2c-pcie-s0-c6-epf {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c6_1, 16, 00032768",

-- "nvscic2c_pcie_s0_c6_2, 16, 00032768",

++ "nvscic2c_pcie_s0_c6_2, 16, 00028672",

"nvscic2c_pcie_s0_c6_3, 16, 00032768",

…..

};

New Channel Addition

To introduce additional NvSciIpc (INTER_CHIP, PCIe) channels, the change must be made in the device-tree nodes of both the NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port and the NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint: nvscic2c-pcie-s0-c5-epc and nvscic2c-pcie-s0-c6-epf, respectively.

File:

<PDK_TOP>/drive-linux/kernel/source/hardware/nvidia/platform/t23x/automotive/kernel-dts/p3710/common/tegra234-p3710-0010-nvscic2c-pcie.dtsi

nvscic2c-pcie-s0-c5-epc {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c5_1, 16, 00032768",

……

-- "nvscic2c_pcie_s0_c5_11, 16, 00032768";

++ "nvscic2c_pcie_s0_c5_11, 16, 00032768",

++ "nvscic2c_pcie_s0_c5_12, 16, 00032768";

};

nvscic2c-pcie-s0-c6-epf {

nvidia,endpoint-db =

"nvscic2c_pcie_s0_c6_1, 16, 00032768",

……

-- "nvscic2c_pcie_s0_c6_11, 16, 00032768";

++ "nvscic2c_pcie_s0_c6_11, 16, 00032768",

++ "nvscic2c_pcie_s0_c6_12, 16, 00032768";

};

File: /etc/nvsciipc.cfg (on target)

INTER_CHIP_PCIE nvscic2c_pcie_s0_c5_11 0000

++ INTER_CHIP_PCIE nvscic2c_pcie_s0_c5_12 0000

…..

…..

…..

INTER_CHIP_PCIE nvscic2c_pcie_s0_c6_11 0000

++ INTER_CHIP_PCIE nvscic2c_pcie_s0_c6_12 0000

Similar changes can be made to remove any of the existing NvSciIpc (INTER_CHIP, PCIe) channels.

For a given pair of NVIDIA DRIVE AGX Orin DevKit as PCIe Root Port and NVIDIA DRIVE AGX Orin DevKit as PCIe Endpoint, a maximum of 16 NvSciIpc (INTER_CHIP, PCIe) channels is supported.
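When adding a channel, the same name has to appear in the nvidia,endpoint-db list and in /etc/nvsciipc.cfg, and the total must stay within 16. The following Python sketch generates both strings. The helper names are hypothetical, the zero-padded field widths are inferred from the examples above rather than from a format specification, and the trailing 0000 field is copied verbatim from the examples without interpreting it.

```python
MAX_CHANNELS = 16  # per Root Port / Endpoint pair, as stated above


def endpoint_db_entry(name: str, frames: int = 16, frame_size: int = 32768) -> str:
    """Format one nvidia,endpoint-db string: "<name>, <frames>, <frame size>"."""
    # Widths (2 and 8 digits) mirror the documented examples.
    return f"{name}, {frames:02d}, {frame_size:08d}"


def nvsciipc_cfg_line(name: str) -> str:
    """Format the matching /etc/nvsciipc.cfg line for an INTER_CHIP_PCIE channel."""
    return f"INTER_CHIP_PCIE {name} 0000"


def add_channel(existing: list[str], name: str) -> list[str]:
    """Return a new channel list with `name` appended, enforcing the 16-channel limit."""
    if name in existing:
        raise ValueError(f"channel already configured: {name}")
    if len(existing) >= MAX_CHANNELS:
        raise ValueError(f"at most {MAX_CHANNELS} channels are supported")
    return existing + [name]


if __name__ == "__main__":
    channels = [f"nvscic2c_pcie_s0_c5_{i}" for i in range(1, 12)]
    channels = add_channel(channels, "nvscic2c_pcie_s0_c5_12")
    print(endpoint_db_entry(channels[-1]))
    print(nvsciipc_cfg_line(channels[-1]))
```

The same helpers would be applied once per side, for example with the c5 names for the Root Port node and the c6 names for the Endpoint node.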

Similarly, for other platforms, the corresponding *.dtsi files require modification.

For NVIDIA DRIVE Recorder:

<PDK_TOP>/drive-linux/kernel/source/hardware/nvidia/platform/t23x/automotive/kernel-dts/p4024/common/tegra234-p4024-nvscic2c-pcie.dtsi

- Frame count: minimum: 1, maximum: 64

- Frame size: minimum: 64B, maximum: 32 KB. Must always be aligned to 64B
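The frame count and frame size limits above lend themselves to a quick check before a device-tree change is made. This is a minimal Python sketch under those stated limits. The function name is hypothetical and the check covers only the three rules listed above.

```python
MIN_FRAMES, MAX_FRAMES = 1, 64
MIN_FRAME_SIZE, MAX_FRAME_SIZE = 64, 32 * 1024  # bytes
FRAME_ALIGNMENT = 64  # bytes


def validate_channel_properties(frames: int, frame_size: int) -> None:
    """Raise ValueError if the values violate the documented limits."""
    if not MIN_FRAMES <= frames <= MAX_FRAMES:
        raise ValueError(
            f"frame count {frames} outside {MIN_FRAMES}..{MAX_FRAMES}")
    if not MIN_FRAME_SIZE <= frame_size <= MAX_FRAME_SIZE:
        raise ValueError(
            f"frame size {frame_size} outside {MIN_FRAME_SIZE}..{MAX_FRAME_SIZE} bytes")
    if frame_size % FRAME_ALIGNMENT != 0:
        raise ValueError(
            f"frame size {frame_size} is not aligned to {FRAME_ALIGNMENT} bytes")


if __name__ == "__main__":
    validate_channel_properties(16, 32768)  # default configuration
    validate_channel_properties(8, 28672)   # values used in the examples above
    print("ok")
```

Both the default (16 frames of 32768 bytes) and the modified example values (8 frames, 28672 bytes) satisfy these limits.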

PCIe Hot-Unplug

To tear down the connection between the PCIe Root Port and the PCIe Endpoint, PCIe hot-unplug the PCIe Endpoint from the PCIe Root Port. Refer to the Restrictions section for more information.

PCIe Hot-Unplug is always executed from the PCIe Endpoint [NVIDIA DRIVE AGX Orin DevKit (miniSAS cable connected to miniSAS port-B)] by initiating the power-down of the PCIe Endpoint controller and subsequently unbinding the nvscic2c-pcie-epf module from the PCIe Endpoint.

Prerequisite: PCIe Hot-Unplug must be attempted only when the PCIe Endpoint is successfully hot-plugged into PCIe Root Port and NvSciIpc(INTER_CHIP, PCIE) channels are enumerated.

To PCIe hot-unplug, execute the following on NVIDIA DRIVE AGX Orin DevKit configured as PCIe Endpoint (miniSAS cable connected to miniSAS port-B). This makes NvSciIpc(INTER_CHIP, PCIE) channels disappear on both the PCIe inter-connected NVIDIA DRIVE AGX Orin DevKits.

sudo -s

cd /sys/kernel/config/pci_ep/

Check that NvSciC2cPcie device nodes are available:

ls /dev/nvscic2c_*

The previous command should list NvSciC2cPcie device nodes for the corresponding PCIe Root Port and PCIe Endpoint connection. Continue with the following set of commands:

echo 0 > controllers/141c0000.pcie_ep/start

Wait until the NvSciC2cPcie device nodes disappear. The following command can be used to check for NvSciC2cPcie device nodes:

ls /dev/nvscic2c_*

Once the device nodes disappear, execute:

unlink controllers/141c0000.pcie_ep/func

A successful PCIe hot-unplug of the PCIe Endpoint from the PCIe Root Port makes the NvSciIpc (INTER_CHIP, PCIe) channels listed in NvSciIpc (INTER_CHIP, PCIe) Channels disappear on both NVIDIA DRIVE AGX Orin DevKits. You can then proceed with a power-cycle or power-off of one or both NVIDIA DRIVE AGX Orin DevKits.
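The "wait until the device nodes disappear" step can be automated instead of repeating ls by hand. The following Python sketch polls for /dev/nvscic2c_* nodes with a timeout. The function name, the timeout, and the polling interval are all hypothetical choices, since no expected wait time is documented.

```python
import glob
import time


def wait_for_nodes_gone(pattern: str = "/dev/nvscic2c_*",
                        timeout_s: float = 10.0,
                        poll_s: float = 0.2) -> bool:
    """Poll until no path matches `pattern`.

    Returns True once no nodes remain, or False if the timeout expires
    while at least one node is still present.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if not glob.glob(pattern):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


if __name__ == "__main__":
    if wait_for_nodes_gone():
        print("device nodes are gone; safe to unlink the function")
    else:
        print("device nodes still present; do not unlink yet")
```

A script that drives the hot-unplug sequence would call this between writing 0 to the controller's start file and unlinking the function, and stop if it returns False.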

PCIe Hot-Replug

To re-establish the PCIe connection between the PCIe Endpoint and the PCIe Root Port, you must PCIe hot-replug the PCIe Endpoint to the PCIe Root Port.

If both SoCs were power-cycled after the PCIe hot-unplug, follow the usual PCIe hot-plug steps. However, if only one of the two SoCs was power-cycled/rebooted, PCIe hot-replug is required to re-establish the connection between them.

Prerequisite: PCIe hot-replug is attempted when one of the two SoCs was power-recycled/rebooted after a successful PCIe hot-unplug between them. If both SoCs were power-recycled/rebooted, the same steps as listed in the Execution Setup section are required to establish the PCIe connection between them.

For platforms that do not have PCIe retimers, to PCIe hot-replug a connection that was PCIe hot-unplugged before an NVIDIA DRIVE AGX Orin DevKit rebooted, execute the following on the NVIDIA DRIVE AGX Orin DevKit configured as PCIe Endpoint (miniSAS cable connected to miniSAS port-B). This makes the NvSciIpc (INTER_CHIP, PCIe) endpoints reappear on both PCIe inter-connected NVIDIA DRIVE AGX Orin DevKits.

When only PCIe Root Port SoC was power-recycled/rebooted

On PCIe Root Port SoC (NVIDIA DRIVE AGX Orin DevKit (miniSAS cable connected to miniSAS port-A))

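Follow the same steps as listed in Linux Kernel Module Insertion, under Execution Setup.

On PCIe Endpoint SoC (NVIDIA DRIVE AGX Orin DevKit (miniSAS cable connected to miniSAS port-B))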

sudo -s

cd /sys/kernel/config/pci_ep/

ln -s functions/nvscic2c_epf_22CC/func controllers/141c0000.pcie_ep

echo 0 > controllers/141c0000.pcie_ep/start

echo 1 > controllers/141c0000.pcie_ep/start

When only the PCIe Endpoint SoC was power-recycled/rebooted

On PCIe Endpoint SoC (NVIDIA DRIVE AGX Orin DevKit (miniSAS cable connected to miniSAS port-B))

Follow the steps in Execution Setup.

On PCIe Root Port SoC (NVIDIA DRIVE AGX Orin DevKit (miniSAS cable connected to miniSAS port-A))

Nothing is required. The module is already inserted.

SoC Error

The only SoC Error scenario is when one or both of the PCIe Root Port SoC and the PCIe Endpoint SoC connected with nvscic2c-pcie encounter a Linux kernel oops or panic. The application might observe timeouts.

Reconnection

On the SoC that is still functional and responsive, the user must follow the same restrictions as for PCIe Hot-Unplug. Once the applications on that SoC exit or the pipeline is purged, the user must recover the faulty SoC by either rebooting or resetting it.

- If the functional (non-faulty) SoC is the PCIe Endpoint SoC, perform the PCIe Hot-Unplug and PCIe Hot-Replug steps listed above on the PCIe Endpoint SoC. On the recovered SoC (PCIe Root Port SoC), perform the steps listed in the Linux Kernel Module Insertion subsection.

- If the functional (non-faulty) SoC is the PCIe Root Port SoC, nothing needs to be done on that SoC. On the recovered SoC (PCIe Endpoint SoC), perform the steps listed in the PCIe Hot-Plug section.

- If both SoCs were faulty, then once each of the two SoCs has recovered, follow the usual Linux Kernel Module Insertion and PCIe Hot-Plug steps, as done to establish the PCIe connection between them initially.

Successful error recovery and PCIe reconnection make the channels available for use again.
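The reconnection cases can be read as a small decision table. The following Python sketch encodes the three cases as documented, returning the section names to follow on each SoC. The function name and the dictionary keys are hypothetical, and the sketch adds no steps beyond those named in the list above (for the case where both SoCs were faulty, it assumes the initial bring-up split: module insertion on both SoCs, PCIe Hot-Plug on the Endpoint).

```python
def reconnection_steps(root_port_faulty: bool,
                       endpoint_faulty: bool) -> dict[str, list[str]]:
    """Map which SoC was faulty to the documented sections to follow per SoC."""
    if root_port_faulty and endpoint_faulty:
        # Same as the initial bring-up of the connection.
        return {
            "root_port": ["Linux Kernel Module Insertion"],
            "endpoint": ["Linux Kernel Module Insertion", "PCIe Hot-Plug"],
        }
    if root_port_faulty:
        # The functional SoC is the Endpoint.
        return {
            "root_port": ["Linux Kernel Module Insertion"],
            "endpoint": ["PCIe Hot-Unplug", "PCIe Hot-Replug"],
        }
    if endpoint_faulty:
        # The functional SoC is the Root Port; nothing to do there.
        return {
            "root_port": [],
            "endpoint": ["PCIe Hot-Plug"],
        }
    # No SoC error: no reconnection is needed.
    return {"root_port": [], "endpoint": []}


if __name__ == "__main__":
    print(reconnection_steps(root_port_faulty=True, endpoint_faulty=False))
```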

PCIe Error

Case 1: PCIe Link Errors (AER)

PCIe AER errors occurring on either the PCIe Root Port SoC or the PCIe Endpoint SoC are always reported on the PCIe Root Port. Only PCIe uncorrectable AER errors are reported.

Recovery: Perform the steps for PCIe Hot-Unplug and then PCIe Hot-Replug listed above. A successful PCIe reconnection (PCIe Hot-Unplug/Replug) makes the channels available for use again.

Case 2: PCIe EDMA Transfer Errors

PCIe EDMA transfer errors can lead to data loss.

Recovery: The PCIe EDMA engine is sanitized once all pipelined transfers are returned. Recovery from PCIe EDMA errors is not guaranteed. It is therefore recommended to retry streaming and, if the error persists, to perform PCIe link recovery.

For PCIe link recovery, 'PCIe Hot-Unplug' and then 'PCIe Hot-Replug' is required, as listed above on PCIe Endpoint SoC.

SC-7 Suspend and Resume Cycle

Follow the same set of restrictions and assumptions for the SC-7 suspend and resume cycle as listed in the PCIe Hot-Unplug and PCIe Hot-Replug sections. Before one or both of the two interconnected SoCs enter SC-7 suspend, PCIe Hot-Unplug must be carried out, observing the restrictions applicable to PCIe Hot-Unplug. Once one or both of the two interconnected SoCs exit SC-7 suspend (that is, on SC-7 resume), the same steps as listed in PCIe Hot-Replug are required.

Assumptions

- NVIDIA Software Communication Interface for Chip to Chip (NvSciC2cPcie) is offered only between an NVIDIA DRIVE Orin SoC as PCIe Root Port and an inter-connected NVIDIA DRIVE Orin SoC as PCIe Endpoint. Producer buffers are copied onto remote consumer buffers pinned to PCIe memory using the PCIe EDMA engine.

- NVIDIA Software Communication Interface for Chip to Chip (NvSciC2cPcie) is offered from a single Guest OS Virtual Machine of an NVIDIA DRIVE Orin SoC as PCIe Root Port to a single Guest OS Virtual Machine of another NVIDIA DRIVE Orin SoC as PCIe Endpoint.

- User applications are responsible for tearing down the ongoing Chip to Chip transfer pipeline gracefully and in coordination on all the SoCs.

- Out-of-the-box support is ensured for an NVIDIA DRIVE AGX Orin DevKit inter-connected with another NVIDIA DRIVE AGX Orin DevKit. In this setup, the default configuration is PCIe controller C5 in PCIe Root Port mode and PCIe controller C6 in PCIe Endpoint mode. Any change in PCIe controller mode, or a move to another set of PCIe controllers for NvSciC2cPcie, requires changes in the tegra234-p3710-0010-nvscic2c-pcie.dtsi device-tree include file.

Restrictions

- Before powering-off/recycling one of the two PCIe inter-connected NVIDIA DRIVE AGX Orin DevKits when one NVIDIA DRIVE AGX Orin DevKit is PCIe hot-plugged into another NVIDIA DRIVE AGX Orin DevKit, you must tear down the PCIe connection between them (PCIe hot-unplug).

- Before tearing down the PCIe connection between the two SoCs (PCIe hot-unplug), all applications or streaming pipelines on both SoCs that use the corresponding NvSciIpc (INTER_CHIP, PCIe) channels must exit or purge. Before they exit or purge, each in-use NvSciIpc (INTER_CHIP, PCIe) channel must be closed with NvSciIpcCloseEndpointSafe().

- On the two PCIe inter-connected NVIDIA DRIVE AGX Orin DevKits, before closing an NvSciIpc (INTER_CHIP, PCIe) channel with NvSciIpcCloseEndpointSafe(), you must ensure the following for that channel:

  - No pipelined NvSciSync waits are pending.

  - All the NvSciIpc (INTER_CHIP, PCIe) channel messages sent have been received.

  - All the NvSciBuf and NvSciSync handles (source and target, export and import) that are registered or CPU-mapped with the NvSciC2cPcie layer have been unregistered and their mappings deleted by invoking the relevant NvSciC2cPcie programming interfaces.

- Unloading of NvSciC2cPcie Linux kernel modules is not supported.

- Errors in NvSciC2cPcie transfers other than PCIe AER and PCIe EDMA transfer errors lead to timeouts in the software layers exercising NvSciC2cPcie.

- For NVIDIA DRIVE Recorder, C2C was verified with the following boot order: Orin-A followed by Orin-B.

- Chip to Chip communication accepts a maximum of 1022 NvSciBufObjects and 1022 NvSciSyncObjects for NvStreams over Chip to Chip communication, system limits permitting.