
The NVIDIA® DriveWorks SDK does not constrain the use of third-party sensors with higher-level modules as long as they output the defined data structures, such as dwLidarDecodedPacket. In certain use-cases, users may bypass the sensor abstraction layer (SAL) and interface directly with the respective modules.
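To illustrate this contract, the sketch below converts a hypothetical vendor point format (integer millimetres, 8-bit intensity) into a fixed-capacity decoded-packet structure. The types `vendor_point`, `decoded_point`, and `decoded_packet` and the function `fill_decoded_packet` are hypothetical stand-ins. They do not reproduce the actual layout of `dwLidarDecodedPacket`; use the DriveWorks API reference for the real fields.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical vendor point: coordinates in millimetres, 8-bit intensity. */
typedef struct {
    int32_t x_mm, y_mm, z_mm;
    uint8_t intensity;
} vendor_point;

/* Hypothetical decoded point in metres, intensity normalised to [0, 1].
   NOT the DriveWorks point type; illustrative only. */
typedef struct {
    float x, y, z, intensity;
} decoded_point;

/* Hypothetical stand-in for a defined output structure such as dwLidarDecodedPacket. */
typedef struct {
    uint64_t timestamp_us;
    size_t n_points;
    decoded_point points[64];
} decoded_packet;

/* Fills `pkt` from raw vendor points. Points beyond the packet capacity are
   dropped. Returns the number of points stored. */
size_t fill_decoded_packet(decoded_packet *pkt, uint64_t timestamp_us,
                           const vendor_point *in, size_t n)
{
    assert(pkt != NULL && (in != NULL || n == 0));
    const size_t cap = sizeof pkt->points / sizeof pkt->points[0];
    const size_t count = n < cap ? n : cap;

    pkt->timestamp_us = timestamp_us;
    for (size_t i = 0; i < count; ++i) {
        /* Unit conversion happens here, so consumers only see metres. */
        pkt->points[i].x = (float)in[i].x_mm / 1000.0f;
        pkt->points[i].y = (float)in[i].y_mm / 1000.0f;
        pkt->points[i].z = (float)in[i].z_mm / 1000.0f;
        pkt->points[i].intensity = (float)in[i].intensity / 255.0f;
    }
    pkt->n_points = count;
    return count;
}
```

Keeping all unit conversion in one function means a consuming module sees only the target structure, regardless of which sensor produced the data.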

However, the SDK also provides flexible options for tightly integrating custom autonomous vehicle sensors with the SAL. Employing one of the methods in this section is recommended because it provides the following benefits:

- Grouping camera sensors to initialize, sort, and deliver sensor data.

For background, the SAL implements sensor functionality in two modular layers:

- The sensor, which implements the communication plane with the device.
- The decoder, which parses and interprets raw sensor data into DriveWorks data structures.
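Here is a minimal sketch of a sensor/decoder split in C, assuming a hypothetical in-memory device. The sensor layer only moves raw bytes, the decoder layer only interprets them, and a small loop connects the two. All names (`sensor_layer`, `decoder_layer`, `fake_device`, `run_pipeline`) and the 2-byte big-endian range encoding are illustrative assumptions, not DriveWorks types.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Sensor layer (communication plane): delivers raw bytes, ignores their meaning. */
typedef struct {
    void *ctx;
    /* Copies the next raw packet (up to cap bytes) into buf; returns 0 when no data. */
    size_t (*read_raw)(void *ctx, uint8_t *buf, size_t cap);
} sensor_layer;

/* Decoder layer: interprets raw bytes, ignores where they came from. */
typedef struct {
    /* Returns 0 on success, -1 if the bytes are not a valid packet. */
    int (*decode)(const uint8_t *buf, size_t len, void *out);
} decoder_layer;

/* Hypothetical device emitting 2-byte packets (big-endian uint16 range in cm). */
typedef struct {
    const uint8_t *data;
    size_t len, pos;
} fake_device;

static size_t fake_read(void *ctx, uint8_t *buf, size_t cap)
{
    fake_device *dev = ctx;
    assert(dev != NULL);
    size_t left = dev->len - dev->pos;
    size_t n = left < 2 ? left : 2; /* one packet per call */
    if (n > cap)
        n = cap;
    memcpy(buf, dev->data + dev->pos, n);
    dev->pos += n;
    return n;
}

static int range_decode(const uint8_t *buf, size_t len, void *out)
{
    if (len != 2)
        return -1;
    *(uint16_t *)out = (uint16_t)((buf[0] << 8) | buf[1]);
    return 0;
}

/* Pulls packets through both layers; returns the number decoded into out. */
size_t run_pipeline(sensor_layer s, decoder_layer d, uint16_t *out, size_t max_out)
{
    uint8_t buf[16];
    size_t count = 0, n;
    while (count < max_out && (n = s.read_raw(s.ctx, buf, sizeof buf)) > 0) {
        if (d.decode(buf, n, &out[count]) == 0)
            ++count; /* invalid packets are skipped */
    }
    return count;
}
```

Each layer is reached only through function pointers, so either one can be swapped without touching the other. For example, a different transport could feed the same decoder.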

The Comprehensive Sensor Plugin Framework enables the full integration of custom sensors (with the exception of cameras) into the DriveWorks Sensor Abstraction Layer.

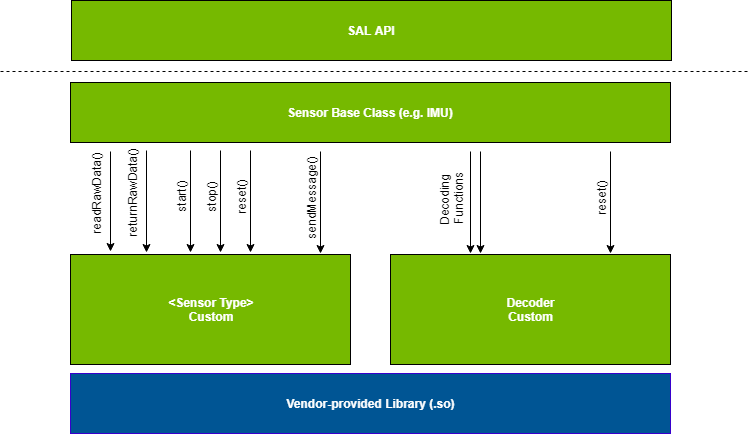

The developer implements the sensor (communication plane) and device-specific decoder functions and compiles this plugin as a shared object file (.so). DriveWorks provides the hooks for the instantiation of these custom sensor plugins.

The following diagram illustrates this architecture.
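A common way to structure such hooks is a single exported entry point that returns a table of function pointers. The sketch below assumes that pattern. The table `sensor_plugin_api`, the entry point `get_plugin_api`, and `PLUGIN_API_VERSION` are hypothetical and do not reproduce the DriveWorks plugin interface, which defines its own function table and symbol names. The plugin side and the host side are placed in one file so the example runs as-is.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define PLUGIN_API_VERSION 1u /* hypothetical ABI version shared by host and plugin */

typedef struct plugin_handle plugin_handle; /* opaque to the host */

/* Hypothetical hook table the host calls; NOT the DriveWorks function table. */
typedef struct {
    uint32_t version;
    plugin_handle *(*create)(const char *params);
    int (*start)(plugin_handle *h);
    int (*read_raw)(plugin_handle *h, uint8_t *buf, size_t cap, size_t *len);
    void (*release)(plugin_handle *h);
} sensor_plugin_api;

/* ---- plugin side (would be compiled into its own .so) ---- */
struct plugin_handle {
    int running;
    uint8_t counter;
};

static plugin_handle *my_create(const char *params)
{
    (void)params; /* a real plugin would parse e.g. a device address here */
    return calloc(1, sizeof(plugin_handle));
}

static int my_start(plugin_handle *h)
{
    assert(h != NULL);
    h->running = 1;
    return 0;
}

static int my_read_raw(plugin_handle *h, uint8_t *buf, size_t cap, size_t *len)
{
    if (!h->running || cap == 0)
        return -1;
    buf[0] = h->counter++; /* stand-in for bytes received from the device */
    *len = 1;
    return 0;
}

static void my_release(plugin_handle *h) { free(h); }

/* The single exported symbol the host looks up. */
const sensor_plugin_api *get_plugin_api(void)
{
    static const sensor_plugin_api api = {
        PLUGIN_API_VERSION, my_create, my_start, my_read_raw, my_release};
    return &api;
}

/* ---- host side ---- */
/* Instantiates a plugin through its table; NULL on version mismatch or failure. */
plugin_handle *host_instantiate(const sensor_plugin_api *api, const char *params)
{
    if (api == NULL || api->version != PLUGIN_API_VERSION)
        return NULL;
    plugin_handle *h = api->create(params);
    if (h != NULL && api->start(h) != 0) {
        api->release(h);
        h = NULL;
    }
    return h;
}
```

In a real deployment, the plugin half would be built separately (for example, `gcc -shared -fPIC my_sensor.c -o libmy_sensor.so`). The host would then obtain `get_plugin_api` with `dlopen` and `dlsym`. The version check guards against loading a plugin built against a different table layout.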

The following sensors are currently supported:

The Lidar & Radar Decoder Plugins enable the integration of custom Ethernet-based lidars and radars with the DriveWorks Sensor Abstraction Layer.

The Comprehensive Sensor Plugin Framework is generally recommended for sensor integration because it gives the developer the most flexibility. However, the Lidar & Radar Decoder Plugins may be useful when only sensor data ingestion, and not sensor control, is required.

These plugins assume that the transport layer uses TCP- or UDP-based Ethernet channels.
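In this model, DriveWorks owns the TCP/UDP transport, so a decoder plugin only needs to validate and parse packet payloads. The sketch below assumes a hypothetical wire format: two magic bytes (`0xA5 0x5A`) and a one-byte point count, followed by each point as a little-endian `uint16` range in centimetres and a little-endian `uint16` azimuth in hundredths of a degree. The format and all names are illustrative. A real sensor's payload layout comes from its vendor documentation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PKT_MAGIC0 0xA5u
#define PKT_MAGIC1 0x5Au
#define PKT_HEADER_LEN 3u  /* magic (2) + point count (1) */
#define PKT_POINT_LEN 4u   /* range (2) + azimuth (2) */
#define PKT_MAX_POINTS 32u

typedef struct {
    float range_m;
    float azimuth_deg;
} polar_point;

typedef struct {
    size_t n_points;
    polar_point points[PKT_MAX_POINTS];
} parsed_packet;

static uint16_t read_le16(const uint8_t *p)
{
    return (uint16_t)(p[0] | (p[1] << 8));
}

/* Structural check run before decoding: magic bytes and exact length. */
int validate_packet(const uint8_t *buf, size_t len)
{
    if (len < PKT_HEADER_LEN || buf[0] != PKT_MAGIC0 || buf[1] != PKT_MAGIC1)
        return 0;
    size_t n = buf[2];
    return n <= PKT_MAX_POINTS && len == PKT_HEADER_LEN + n * PKT_POINT_LEN;
}

/* Decodes one UDP payload; returns 0 on success, -1 if the packet is invalid. */
int decode_packet(const uint8_t *buf, size_t len, parsed_packet *out)
{
    assert(out != NULL);
    if (!validate_packet(buf, len))
        return -1;
    out->n_points = buf[2];
    for (size_t i = 0; i < out->n_points; ++i) {
        const uint8_t *p = buf + PKT_HEADER_LEN + i * PKT_POINT_LEN;
        out->points[i].range_m = read_le16(p) / 100.0f;         /* cm -> m */
        out->points[i].azimuth_deg = read_le16(p + 2) / 100.0f; /* 0.01 deg -> deg */
    }
    return 0;
}
```

The decoder checks the magic bytes and the exact length before touching any point data. This keeps a malformed or truncated datagram from being read past its end.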

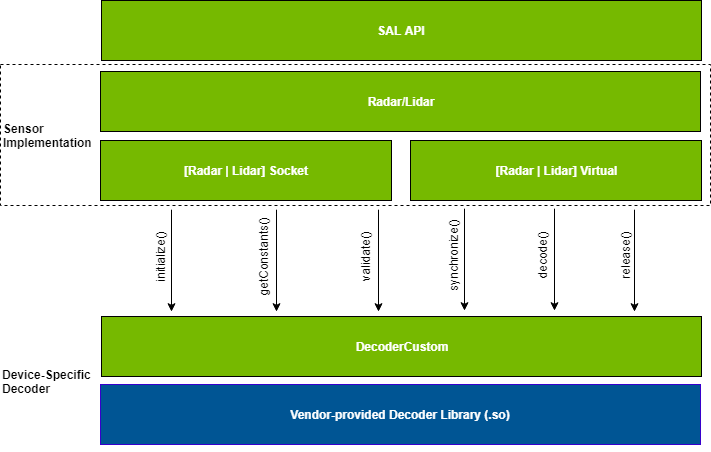

DriveWorks provides the sensor implementation and manages the sensor interfaces. The developer implements device-specific decoder functions that parse and interpret raw sensor data into DriveWorks data structures and compiles this plugin as a shared object file (.so). DriveWorks provides the hooks for the instantiation of these custom decoder plugins.

The following diagram illustrates this architecture. The socket interface is for real data, and the virtual interface is for data recorded with the socket interface.
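Because of this socket/virtual split, the same decoder must accept both live packets and replayed ones. Here is a minimal sketch, assuming a hypothetical length-prefixed recording format in which each raw packet is preceded by a timestamp and a payload length. This is not the DriveWorks recording format. `record_packet` stores packets as they arrive from the socket, and `replay` feeds them back in order to a decoder callback with the same signature used for live data. `stats_decoder` is a hypothetical example decoder that only checks timestamp ordering and counts bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical per-packet record header; NOT the DriveWorks recording format. */
typedef struct {
    uint64_t timestamp_us;
    uint32_t len;
} record_header;

typedef struct {
    uint8_t *data;
    size_t cap, used;
} recording;

/* Socket side: appends one raw packet as received. Returns 0, or -1 if full. */
int record_packet(recording *rec, uint64_t timestamp_us, const uint8_t *payload, uint32_t len)
{
    assert(rec != NULL && rec->used <= rec->cap);
    record_header h = {timestamp_us, len};
    if (rec->cap - rec->used < sizeof h + len)
        return -1;
    memcpy(rec->data + rec->used, &h, sizeof h);
    memcpy(rec->data + rec->used + sizeof h, payload, len);
    rec->used += sizeof h + len;
    return 0;
}

/* Decoder signature shared by live and replayed data; returns 0 on success. */
typedef int (*decode_fn)(uint64_t timestamp_us, const uint8_t *buf, size_t len, void *user);

/* Virtual side: feeds every recorded packet, in order, to the decoder.
   Returns the number of packets the decoder accepted, or -1 if truncated. */
long replay(const recording *rec, decode_fn decode, void *user)
{
    size_t pos = 0;
    long accepted = 0;
    while (pos < rec->used) {
        record_header h;
        if (rec->used - pos < sizeof h)
            return -1;
        memcpy(&h, rec->data + pos, sizeof h);
        pos += sizeof h;
        if (rec->used - pos < h.len)
            return -1;
        if (decode(h.timestamp_us, rec->data + pos, h.len, user) == 0)
            ++accepted;
        pos += h.len;
    }
    return accepted;
}

/* Hypothetical example decoder: rejects out-of-order packets, counts bytes. */
typedef struct {
    uint64_t last_ts;
    size_t bytes;
} replay_stats;

static int stats_decoder(uint64_t ts, const uint8_t *buf, size_t len, void *user)
{
    replay_stats *s = user;
    (void)buf;
    if (ts < s->last_ts)
        return -1;
    s->last_ts = ts;
    s->bytes += len;
    return 0;
}
```

Storing raw packets rather than decoded output means a recording can be re-decoded later, for example after a decoder fix.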

The following sensors are currently supported:

Custom Cameras (SIPL) enables the integration of third-party-supported GMSL cameras by leveraging the NvMedia Sensor Input Processing Library (SIPL).

This approach assumes that the developer has implemented or otherwise acquired the SIPL-based camera drivers and associated tuning files, and has installed them according to the NvMedia guidelines.

For more information, please refer to Custom Cameras (SIPL).